Introduction

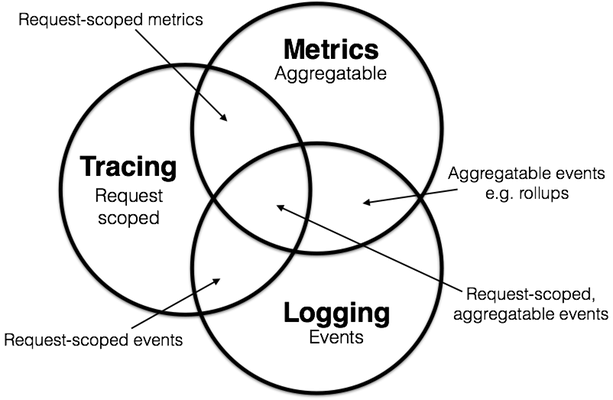

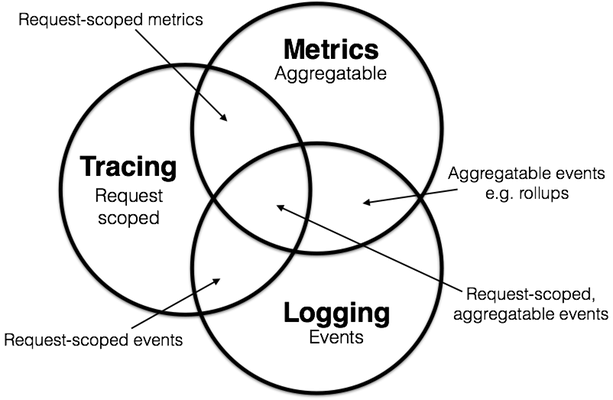

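Many services today use a distributed architecture, with large numbers of microservices deployed in the cloud. We therefore need a mechanism for observing how these services are running, which is why the term observability is heard more and more often.

Put simply, observability looks at a system from the inside, taking a white-box approach to monitoring how it runs. It spans the entire application lifecycle: by analyzing an application's metrics, logs and traces, it builds a complete observability model that enables fault diagnosis, root-cause analysis and fast recovery.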

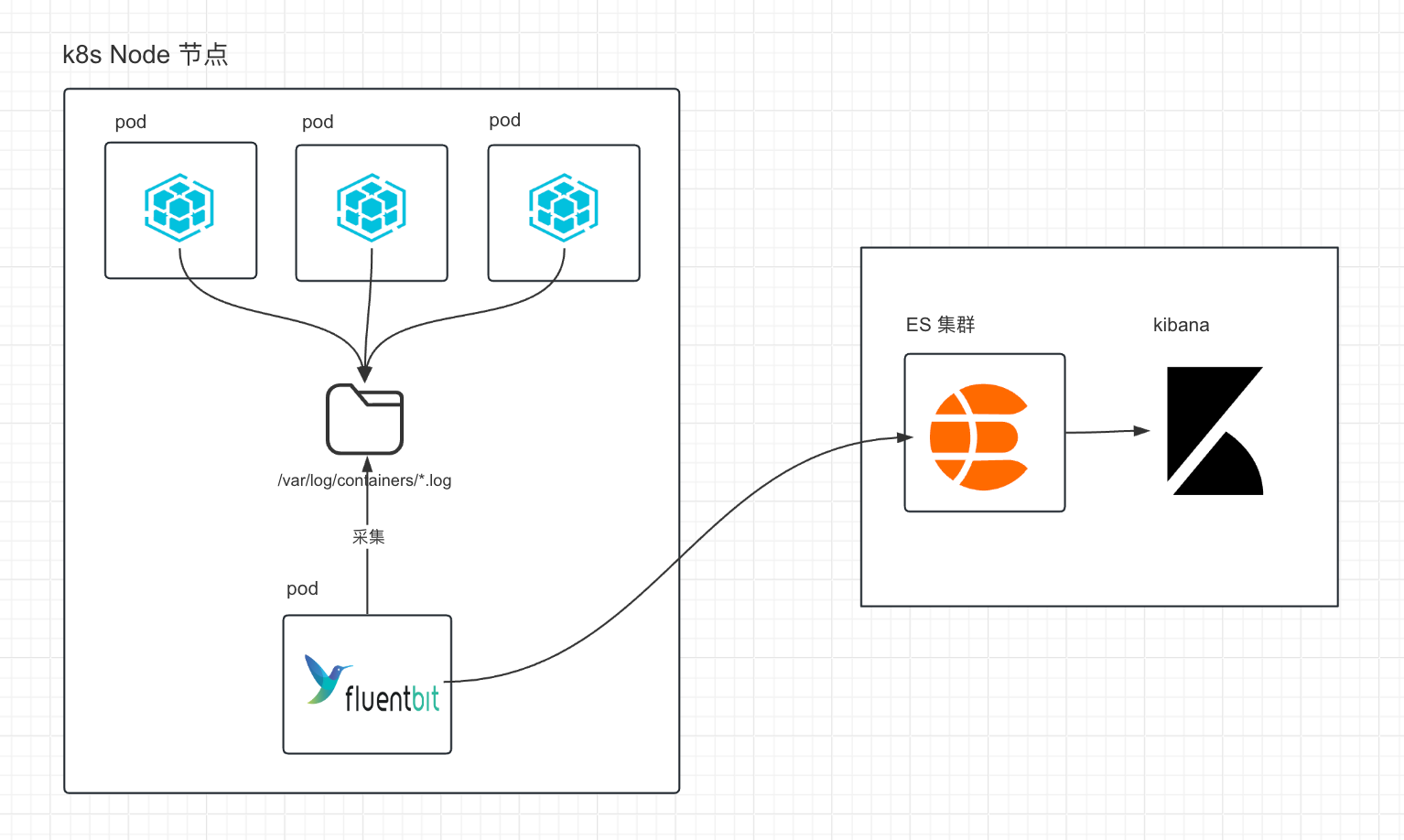

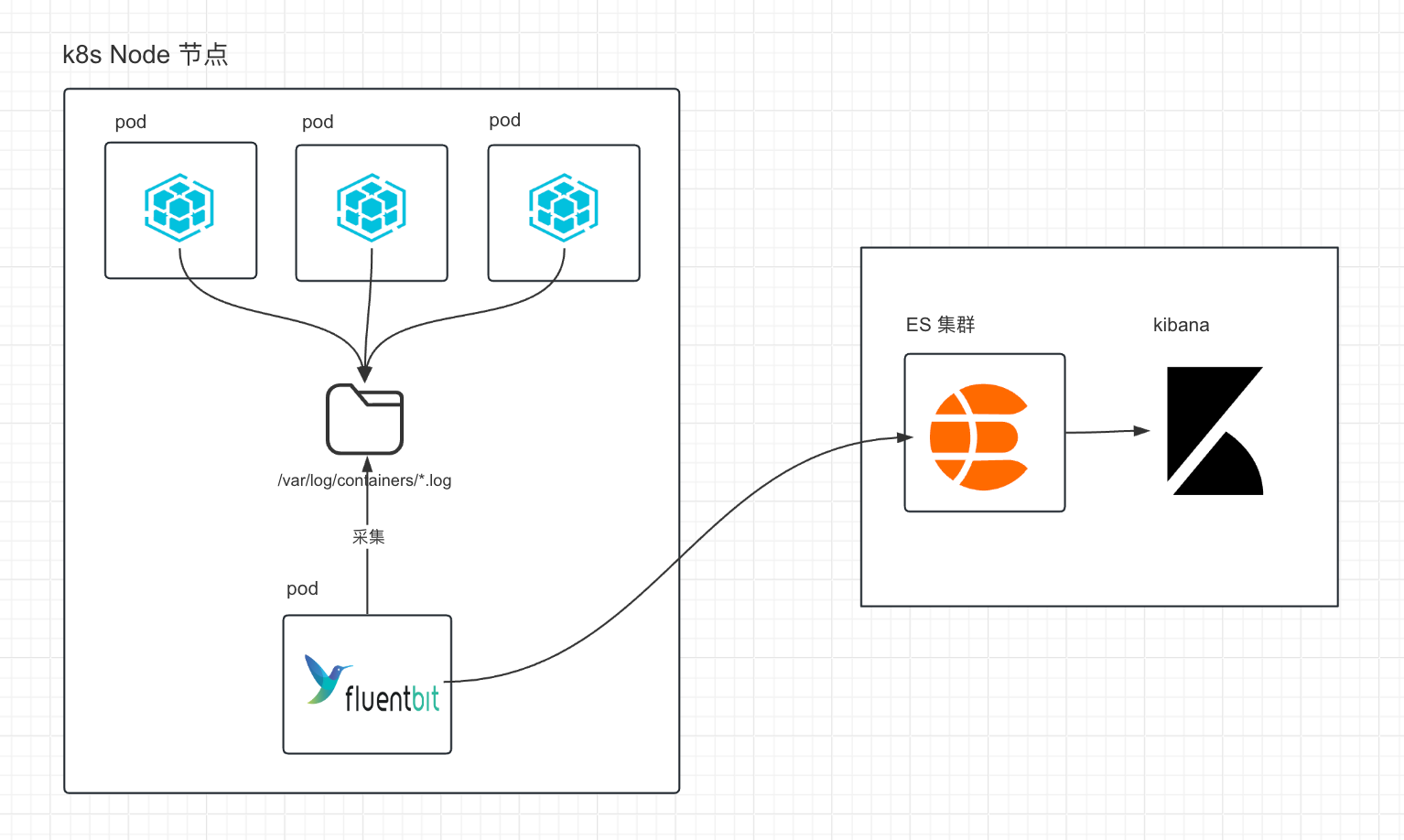

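This article briefly walks through how to build a working centralized logging system, ELK (with Fluent Bit taking the place of Logstash as the collector), in a Kubernetes environment, so that logs from cloud services are collected and displayed in one place.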

Background

DaemonSet

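Before we start, a quick word on what a DaemonSet is.

From the official DaemonSet documentation:

A DaemonSet ensures that all (or some) nodes run a copy of a Pod. As nodes are added to the cluster, Pods are added to them. As nodes are removed from the cluster, those Pods are garbage collected. Deleting a DaemonSet cleans up the Pods it created.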

Some typical uses of a DaemonSet:

- running a cluster daemon on every node

- running a log collection daemon on every node

- running a monitoring daemon on every node
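To make the "one copy per node" guarantee concrete, here is a small bash sketch that checks one half of it: that no node runs more than one copy. It assumes you pipe in the node name of each of the DaemonSet's Pods, one per line, for example from `kubectl get pods ... -o custom-columns=NODE:.spec.nodeName --no-headers`; the helper name `check_one_pod_per_node` is hypothetical.

```shell
#!/usr/bin/env bash
# check_one_pod_per_node (hypothetical helper): reads one node name per line
# on stdin (one line per DaemonSet Pod) and fails if any node hosts more than
# one Pod, printing the offending node names to stderr.
check_one_pod_per_node() {
  local dups
  # sort groups identical names; uniq -d prints each name seen more than once
  dups=$(sort | uniq -d)
  if [ -n "$dups" ]; then
    echo "nodes with more than one pod: $dups" >&2
    return 1
  fi
  echo "ok: at most one pod per node"
}

# Example usage against a real cluster (namespace and selector are placeholders):
#   kubectl get pods -n <namespace> -l <label-selector> \
#     -o custom-columns=NODE:.spec.nodeName --no-headers | check_one_pod_per_node
```

The other half (every eligible node has a copy) is what the DaemonSet's `desiredNumberScheduled` status field tracks.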

ELK components

Elasticsearch

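Elasticsearch documentation

Elasticsearch stores the final data, builds indexes and provides log search. It is an open-source distributed search engine offering three core functions: collecting, analyzing and storing data. Its features include a distributed design, zero configuration, automatic node discovery, automatic index sharding, index replicas, a RESTful API, multiple data sources and automatic load balancing of searches.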
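Because Elasticsearch is driven through its RESTful interface, storing and searching a document comes down to two HTTP calls. The bash sketch below wraps them with `curl`; the helper names, the `ES_URL` default and the `demo-logs` index name are assumptions for illustration only.

```shell
#!/usr/bin/env bash
# Hypothetical helpers illustrating the Elasticsearch REST interface.
# ES_URL is an assumption; point it at your own node.
ES_URL="${ES_URL:-http://localhost:9200}"

# Store one JSON document under an explicit id: PUT /<index>/_doc/<id>
es_index_doc() {
  local index="$1" id="$2" json="$3"
  curl -sS -X PUT "$ES_URL/$index/_doc/$id" \
    -H 'Content-Type: application/json' -d "$json"
}

# Search with a URI query string: GET /<index>/_search?q=<query>
# (-G turns the --data-urlencode payload into a URL query parameter)
es_search() {
  local index="$1" query="$2"
  curl -sS -G "$ES_URL/$index/_search" --data-urlencode "q=$query"
}

# Example usage ("demo-logs" is a made-up index name):
#   es_index_doc demo-logs 1 '{"level":"error","message":"disk full"}'
#   es_search demo-logs 'level:error'
```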

Kibana

Kibana documentation

An excellent front end for displaying logs: it can turn logs into a wide variety of charts and provides powerful data visualization.

Fluent-Bit

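Fluent Bit documentation

Fluent Bit is an open-source, lightweight log processor and forwarder from the Fluentd ecosystem; like Fluentd, it unifies data collection and consumption so that data is easier to use and understand. Its key features include structuring data as JSON wherever possible, a pluggable architecture, minimal resource usage, buffering to avoid data loss, failover and high-availability support, and a strong community.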

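Solution overview

Hands-on deployment

Environment

Here we assume the services are deployed on Kubernetes, while ES and Kibana run on a virtual machine in the same network.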

Deploy Elasticsearch

Download ES

Official Elasticsearch: https://www.elastic.co/downloads/elasticsearch

```shell
$ curl -O 'https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-7.12.0-linux-x86_64.tar.gz'
...
```
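Elastic publishes a `.sha512` file next to each download, so the tarball can be verified before it is extracted. A minimal bash sketch, assuming GNU coreutils' `sha512sum` is available (on macOS, `shasum -a 512 -c` plays the same role); the helper name `verify_sha512` is hypothetical.

```shell
#!/usr/bin/env bash
# verify_sha512 (hypothetical helper): checks <file> against <file>.sha512,
# which holds "<hex digest>  <file name>" in sha512sum's format.
verify_sha512() {
  local file="$1"
  # Run from the file's directory so the relative name inside .sha512 resolves
  (cd "$(dirname "$file")" && sha512sum -c "$(basename "$file").sha512")
}

# Example usage:
#   curl -O 'https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-7.12.0-linux-x86_64.tar.gz.sha512'
#   verify_sha512 elasticsearch-7.12.0-linux-x86_64.tar.gz \
#     && tar -xzf elasticsearch-7.12.0-linux-x86_64.tar.gz -C ~
```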

Create the ES data directories

Create data and logs directories for Elasticsearch.

```shell
$ mkdir ~/es_data
$ mkdir ~/es_logs
```

Edit the ES configuration files

```shell
$ vim ~/elasticsearch-7.12.0/config/jvm.options
...
$ vim ~/elasticsearch-7.12.0/config/elasticsearch.yml
...
```
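The actual edits are not shown above. For a single-node test setup, a hedged sketch of settings that are commonly used, written by a bash helper: it points `path.data`/`path.logs` at the two directories just created, listens on all interfaces so Fluent Bit inside the cluster can reach the VM, and sets the heap through a file in `config/jvm.options.d/` (supported since 7.7). The helper name, cluster/node names and the 1 GB heap are assumptions; tune them for your VM.

```shell
#!/usr/bin/env bash
# write_single_node_config (hypothetical helper): appends a minimal
# single-node setup to <es_home>/config/elasticsearch.yml and writes the heap
# size to <es_home>/config/jvm.options.d/heap.options. Run it only once:
# duplicate keys in elasticsearch.yml stop the node from starting.
write_single_node_config() {
  local es_home="$1"
  # Unquoted EOF so $HOME expands to an absolute path at write time
  cat >> "$es_home/config/elasticsearch.yml" <<EOF
cluster.name: logging-demo
node.name: node-1
# Reuse the data and logs directories created earlier
path.data: $HOME/es_data
path.logs: $HOME/es_logs
# Listen on all interfaces so Fluent Bit in the cluster can reach this VM
network.host: 0.0.0.0
http.port: 9200
# Form a one-node cluster without searching for other nodes
discovery.type: single-node
EOF
  mkdir -p "$es_home/config/jvm.options.d"
  # Equal min and max heap sizes
  printf '%s\n' '-Xms1g' '-Xmx1g' > "$es_home/config/jvm.options.d/heap.options"
}

# Example usage:
#   write_single_node_config ~/elasticsearch-7.12.0
```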

Run ES

Elasticsearch refuses to start as root, so launch it as a regular user:

```shell
$ nohup ~/elasticsearch-7.12.0/bin/elasticsearch &
...
```
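Since the node starts in the background, it helps to wait until it answers before moving on. A bash sketch built on the cluster health API: `wait_for_status=yellow` makes Elasticsearch hold each request (here up to 5s) until the cluster is at least yellow, and a timed-out wait comes back as HTTP 408, which `curl -f` turns into a failure. The retry count, interval, `ES_URL` default and helper name are assumptions.

```shell
#!/usr/bin/env bash
# wait_for_es (hypothetical helper): polls cluster health up to N times
# (default 30), succeeding once the cluster is at least yellow. A single node
# usually reports yellow when indices have replicas, so green is not required.
ES_URL="${ES_URL:-http://localhost:9200}"

wait_for_es() {
  local tries="${1:-30}"
  while [ "$tries" -gt 0 ]; do
    # Connection refused (node still starting) and HTTP 408 both fail here
    if curl -fsS "$ES_URL/_cluster/health?wait_for_status=yellow&timeout=5s" >/dev/null; then
      return 0
    fi
    tries=$((tries - 1))
    sleep 2
  done
  return 1
}

# Example usage after starting the node with nohup:
#   wait_for_es 60 && echo "Elasticsearch is up"
```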

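Deploy Kibana

Kibana should run the same version as Elasticsearch, so the image tag matches the 7.12.0 node installed above. `ELASTICSEARCH_HOSTS` tells Kibana where ES is, and the port mapping publishes Kibana's port 5601 as port 5602 on the VM's loopback interface only.

```shell
$ docker run \
    -d \
    --name kb \
    -p '127.0.0.1:5602:5601' \
    -e ELASTICSEARCH_HOSTS="http://<host>:<port>" \
    'docker.elastic.co/kibana/kibana:7.12.0'
```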
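A quick way to confirm Kibana is up is to call its `/api/status` endpoint. The bash sketch below treats HTTP 200 as ready; while Kibana is still starting or cannot reach Elasticsearch it typically answers 503 ("Kibana server is not ready yet"). The `KIBANA_URL` default mirrors the `127.0.0.1:5602` mapping above; the helper name is hypothetical.

```shell
#!/usr/bin/env bash
# kibana_ready (hypothetical helper): succeeds when /api/status returns 200.
KIBANA_URL="${KIBANA_URL:-http://127.0.0.1:5602}"

kibana_ready() {
  local code
  # -o /dev/null discards the body; -w prints only the HTTP status code
  code=$(curl -s -o /dev/null -w '%{http_code}' "$KIBANA_URL/api/status")
  [ "$code" = "200" ]
}

# Example usage on the VM:
#   kibana_ready && echo "Kibana is ready"
# Because Kibana listens on loopback only, reach it from your machine through
# an SSH tunnel, then open http://127.0.0.1:5602 in a browser:
#   ssh -L 5602:127.0.0.1:5602 <user>@<vm>
```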

Deploy Fluent Bit (the key part)

The manifest below creates everything Fluent Bit needs: the `logging` namespace, a ServiceAccount allowed to read pods and namespaces, the configuration as a ConfigMap, and the DaemonSet that runs one Fluent Bit Pod per node. The `tail` input reads container logs from `/var/log/containers`, filter Steps 1 to 6 parse and route them, and only records re-tagged `loggly_logs` are sent to Elasticsearch.

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: logging
  labels:
    name: fluent-bit
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: fluent-bit-service-account
  namespace: logging
  labels:
    name: fluent-bit
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: fluent-bit-read-role
  labels:
    name: fluent-bit
rules:
  - apiGroups: [""]
    resources:
      - namespaces
      - pods
    verbs: ["get", "list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: fluent-bit-role-binding
  labels:
    name: fluent-bit
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: fluent-bit-read-role
subjects:
  - kind: ServiceAccount
    name: fluent-bit-service-account
    namespace: logging
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: fluent-bit-config
  namespace: logging
  labels:
    name: fluent-bit
    k8s-app: fluent-bit-config-map
data:
  fluent-bit.conf: |
    [SERVICE]
        Parsers_File /fluent-bit/etc/parsers.conf

    [INPUT]
        Name             tail
        Tag              docker_logs
        Path             /var/log/containers/*.log
        DB               /fluent-bit-db/flb.db
        Mem_Buf_Limit    5MB
        Skip_Long_Lines  Off
        Refresh_Interval 10

    # =======================
    # Output to Elasticsearch
    # =======================
    [OUTPUT]
        Name                es
        Match               loggly_logs
        # ------------------------------------------
        # Elastic cluster in K8s or Azure VM Cluster
        # ------------------------------------------
        Host                xxx.xxx.xxx.xxx
        Port                9200
        Type                _doc
        Generate_ID         On
        Logstash_Format     On
        Logstash_Prefix     flb_logs
        Logstash_DateFormat %Y.%m.%d

    # -----------------------------------
    # Step 1 -> Docker Logs Format Parser
    # -----------------------------------
    [FILTER]
        Name         parser
        Match        docker_logs
        Parser       cri
        Key_Name     log
        Reserve_Data On

    # ---------------------------------
    # Step 2 -> Match Target Log Stream
    # ---------------------------------
    [FILTER]
        Name  grep
        Match docker_logs
        Regex log (loggly|audit)

    # ------------------------------------------
    # Step 3 -> Remove Unused Docker Record Keys
    # ------------------------------------------
    [FILTER]
        Name       record_modifier
        Match      docker_logs
        Remove_key stream
        Remove_key time
        Remove_key logtag

    # ---------------------------------------
    # Step 4 -> Parse (Loggly | Audit) Format
    # ---------------------------------------
    [FILTER]
        Name         parser
        Match        docker_logs
        Parser       loggly_parser
        Parser       audit_parser
        Key_Name     log
        Reserve_Data Off

    # ---------------------------------
    # Step 5 -> Parts Log Stream by Tag
    # ---------------------------------
    [FILTER]
        Name  rewrite_tag
        Match docker_logs
        Rule  $name loggly loggly_logs false
        Rule  $name audit  audit_logs  false

    # ----------------------------------------
    # Step 6 -> Parse Audit Message Log Format
    # ----------------------------------------
    [FILTER]
        Name         parser
        Match        audit_logs
        Parser       audit_message_parser
        Key_Name     message
        Reserve_Data Off
  parsers.conf: |
    [PARSER]
        Name        cri
        Format      regex
        Regex       ^xxxxxxxxxxxxxxxx*)$
        Time_Key    time
        Time_Format %Y-%m-%dT%H:%M:%S.%L%z

    [PARSER]
        Name        docker_parser
        Format      json
        Time_Key    time
        Time_Format %Y-%m-%dT%H:%M:%S.%L
        Time_Keep   On

    [PARSER]
        Name        loggly_parser
        Format      regex
        Regex       ^xxxxxxxxx*)
        Time_Key    logtime
        Time_Format %Y-%m-%d %H:%M:%S.%L
        Time_Keep   On

    # audit_parser and audit_message_parser, referenced by the filters in
    # fluent-bit.conf, must be defined here as well (not shown in this example).
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: fluent-bit-deamonset
  namespace: logging
  labels:
    name: fluent-bit
    k8s-app: fluent-bit-logging
    version: v1
    kubernetes.io/cluster-service: "true"
spec:
  selector:
    matchLabels:
      name: fluent-bit-logging
  template:
    metadata:
      labels:
        name: fluent-bit-logging
        k8s-app: fluent-bit-logging
        version: v1
        kubernetes.io/cluster-service: "true"
    spec:
      containers:
        - name: fluent-bit
          image: fluent/fluent-bit:latest
          imagePullPolicy: Always
          env:
            - name: ACCESS_ID
              value: xxxxxx
            - name: ACCESS_KEY
              value: xxxxxx
          volumeMounts:
            - name: varlog
              mountPath: /var/log
              readOnly: true
            - name: fluent-bit-persistent-db
              mountPath: /fluent-bit-db
            - name: fluent-bit-config
              mountPath: /fluent-bit/etc/
      terminationGracePeriodSeconds: 10
      volumes:
        - name: varlog
          hostPath:
            path: /var/log
        - name: fluent-bit-persistent-db
          hostPath:
            path: /var/tmp
        - name: fluent-bit-config
          configMap:
            name: fluent-bit-config
      serviceAccountName: fluent-bit-service-account
      tolerations:
        - key: node-role.kubernetes.io/master
          operator: Exists
          effect: NoSchedule
        - operator: "Exists"
          effect: "NoExecute"
        - operator: "Exists"
          effect: "NoSchedule"
```
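Filter Steps 1 to 3 can be approximated offline, which helps when checking which container log lines will survive the pipeline. The bash sketch below assumes containerd's CRI log layout (`<time> <stream> <logtag> <message>`) and uses the CRI regex commonly shown in the Fluent Bit documentation in place of the elided one in `parsers.conf`. The function names are hypothetical, and Steps 4 to 6 are not modeled because their parsers are not shown.

```shell
#!/usr/bin/env bash
# Offline approximation of filter Steps 1-3 for CRI log lines such as:
#   2021-04-01T10:00:00.123456789Z stdout F {"msg":"..."}

# Step 1 (parser cri): print only the <message> part of well-formed lines
cri_message() {
  sed -E -n 's/^[^ ]+ (stdout|stderr) [^ ]* (.*)$/\2/p'
}

# Step 2 (grep, Regex log (loggly|audit)): keep messages mentioning either word
keep_target_streams() {
  grep -E '(loggly|audit)'
}

# Step 3 (record_modifier) needs no code here: cri_message already drops the
# time, stream and logtag fields.

# Example usage on a node:
#   cat /var/log/containers/*.log | cri_message | keep_target_streams
```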

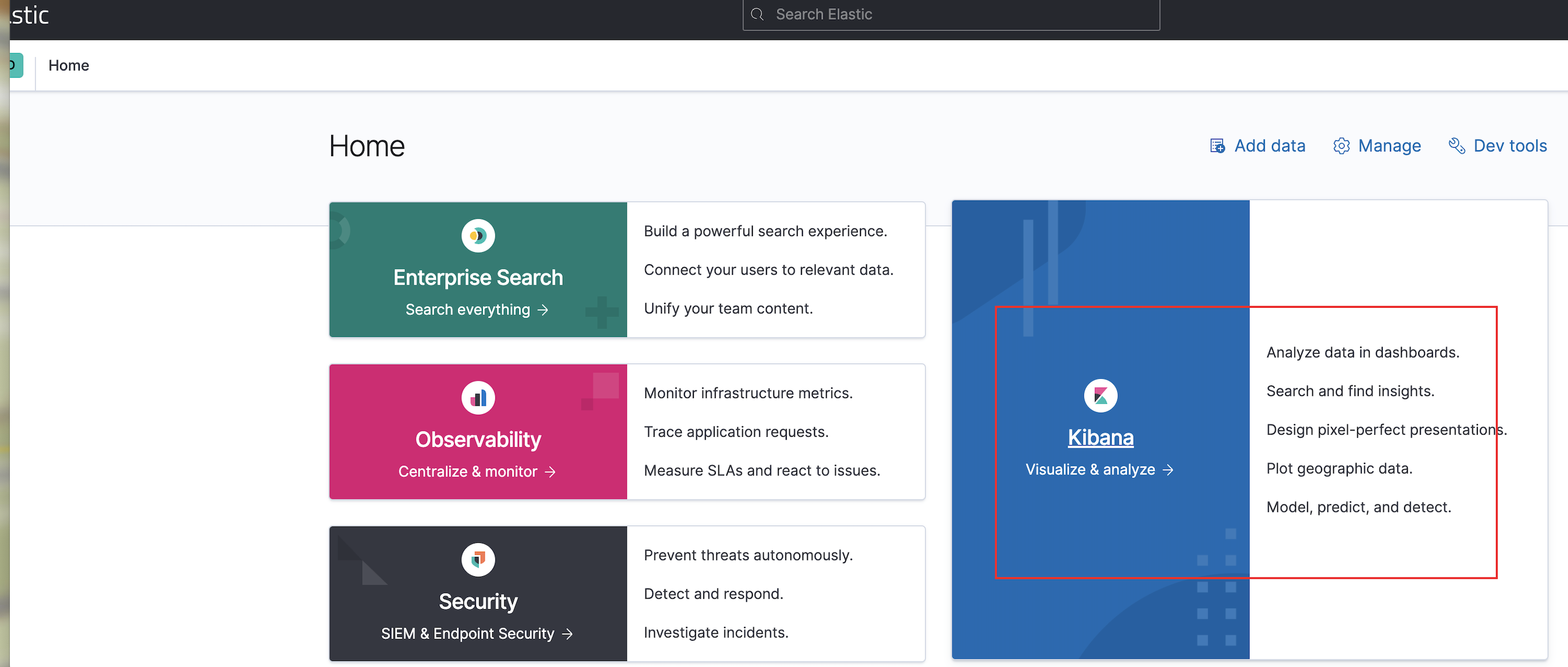

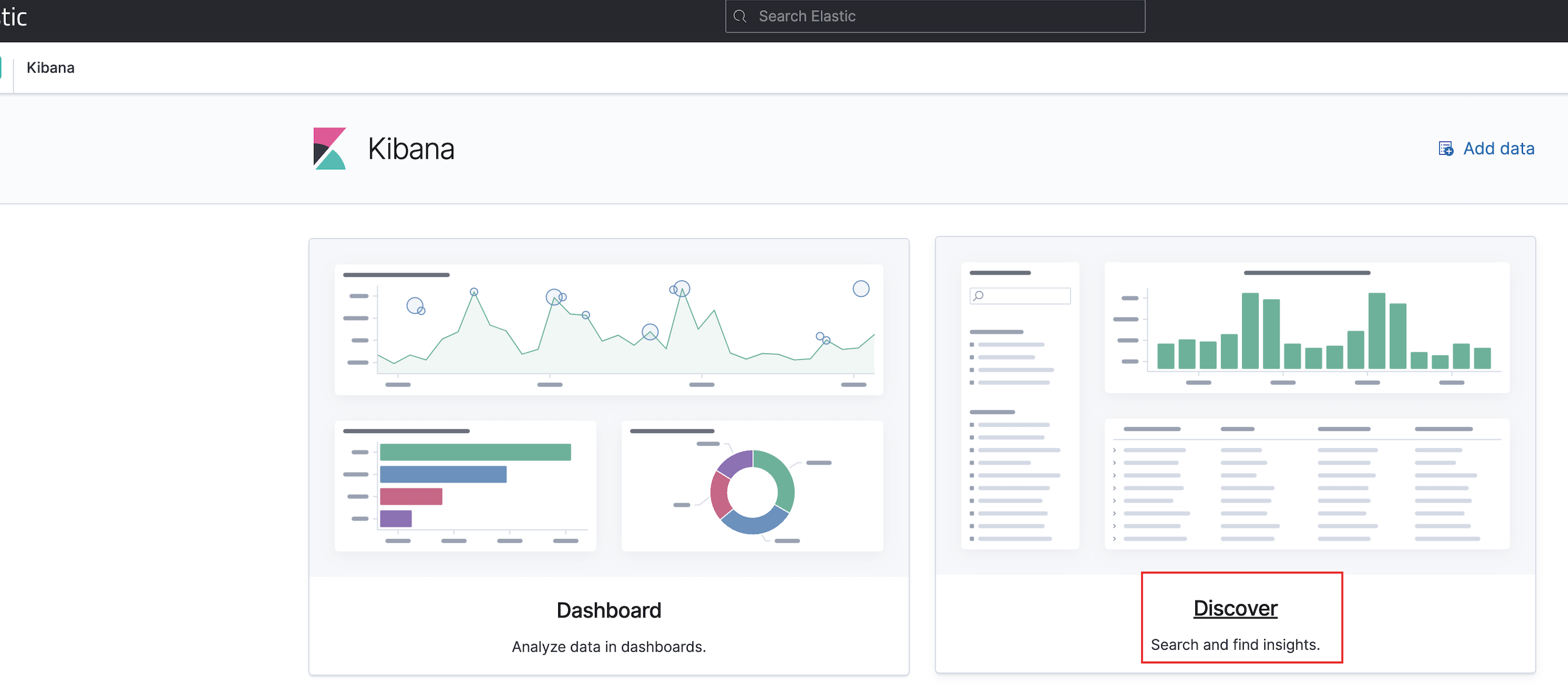

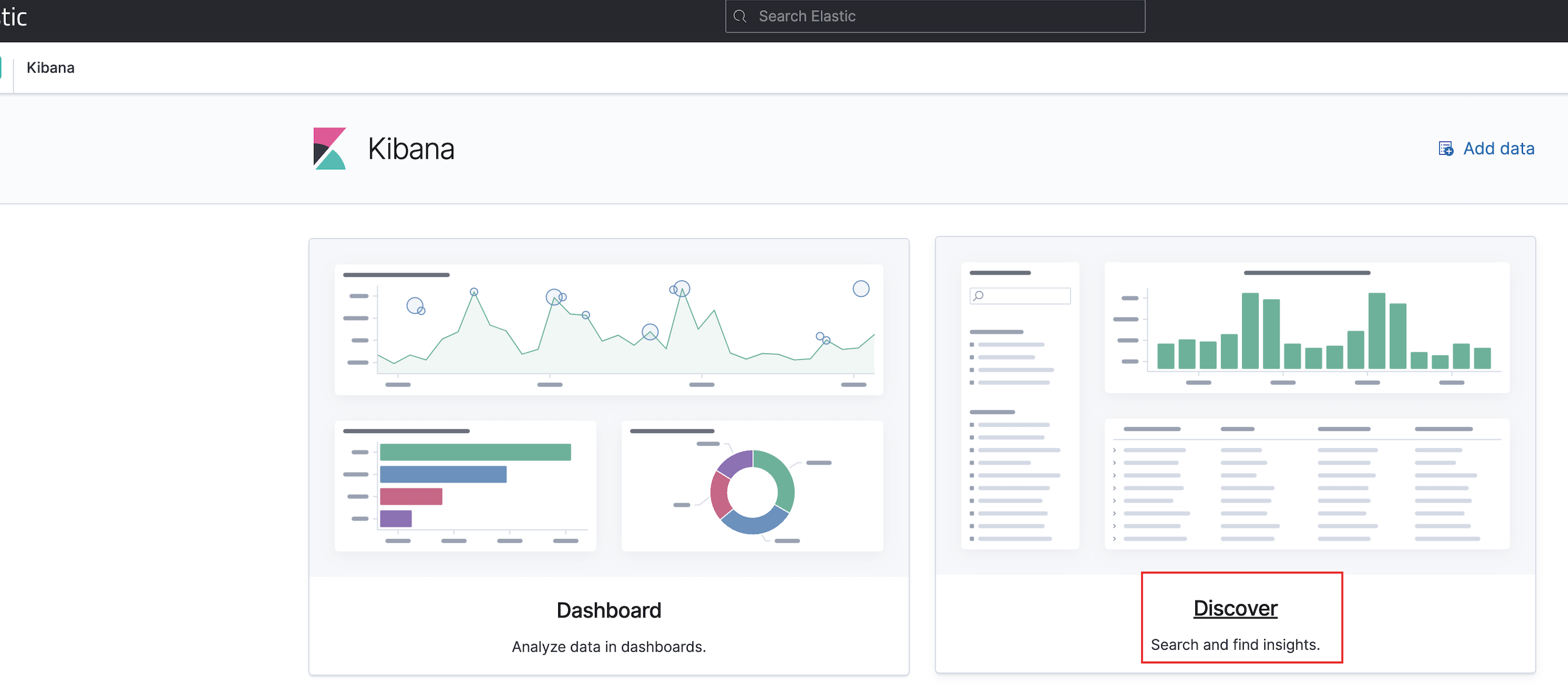
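After running `kubectl apply -f` on the manifest (saved as, say, `fluent-bit.yaml`, a hypothetical file name), the DaemonSet is fully rolled out when every node that should run Fluent Bit has a ready Pod. A small bash sketch of that check compares the DaemonSet's `desiredNumberScheduled` and `numberReady` status fields; `ds_fully_ready` is a hypothetical helper, and `kubectl rollout status` gives a similar answer interactively.

```shell
#!/usr/bin/env bash
# ds_fully_ready (hypothetical helper): takes "<desired> <ready>" and succeeds
# only when at least one Pod is desired and all desired Pods are ready.
ds_fully_ready() {
  # Split the argument into two words (function-local positional parameters)
  set -- $1
  [ "$#" -eq 2 ] && [ "$1" -gt 0 ] && [ "$1" -eq "$2" ]
}

# Example usage against the cluster:
#   kubectl apply -f fluent-bit.yaml
#   status=$(kubectl -n logging get ds -l k8s-app=fluent-bit-logging \
#     -o jsonpath='{.items[0].status.desiredNumberScheduled} {.items[0].status.numberReady}')
#   ds_fully_ready "$status" && echo "Fluent Bit runs on every node"
#   kubectl -n logging rollout status daemonset/fluent-bit-deamonset
#   kubectl -n logging logs -l k8s-app=fluent-bit-logging --tail=20
```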

Result

Finally, we can log in to Kibana to view the logs; the Kibana interface is shown below.
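Because the output uses `Logstash_Format On` with `Logstash_Prefix flb_logs` and `Logstash_DateFormat %Y.%m.%d`, logs land in one index per day, so a Kibana index pattern such as `flb_logs-*` covers all of them. The bash sketch below computes the expected daily index name for a date, assuming the date is taken in UTC and GNU `date` is available; `flb_index_name` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# flb_index_name (hypothetical helper): daily index name produced by
# Logstash_Prefix flb_logs + Logstash_DateFormat %Y.%m.%d for a UTC date
# (defaults to now).
flb_index_name() {
  # GNU date: -d parses the given date, -u interprets and prints it in UTC
  printf 'flb_logs-%s\n' "$(date -u -d "${1:-now}" +%Y.%m.%d)"
}

# Example usage (replace <host> with the ES VM address):
#   curl -s "http://<host>:9200/_cat/indices/flb_logs-*?v"
#   curl -s "http://<host>:9200/$(flb_index_name)/_count"
# In Kibana, create the index pattern flb_logs-* and pick @timestamp (the es
# output's default time field) as the time field.
```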

Reference